tW: Write So Others Can Verify

Turn “we did X” statements into concise, transparent, and replicable methodological descriptions that others can verify.

From “We Did” to “You Can Verify”

In this edition of thesify weekly, we are zooming in on a simple writing shift that makes your research easier to trust and easier to build on. When you replace broad, high-level statements with a few concrete specifics (what you used, how choices were made, and where key materials can be accessed), you give readers what they need to verify your process without having to fill in the gaps themselves. This week’s blog shows how thesify’s new Methods-specific feedback surfaces the most common “missing detail” points, so you can revise quickly and keep your prose lean.

This Week’s thesify Feature to Try: Theo’s Digest Main Claims

Verification starts with a basic question: what, precisely, is the paper claiming? If the claims are vague or overgeneralized, readers cannot reliably check the logic, assess the evidence, or reuse the work. Paper Digest’s Main claims feature isolates the central assertions of a paper into a short list, giving you a practical starting point for making research outputs more checkable, whether you are evaluating someone else’s paper or refining your own.

thesify’s Digest clarifies what the authors are asserting by extracting the paper’s main claims.

How to Find and Use “Main claims”

Open Digest, expand Main claims, and use the extracted assertions as a starting point for verification.

Upload a scientific paper to thesify.

Navigate to Digest in the right-side feedback.

Expand Main claims.

Read the claims as an evaluation checklist, not a summary:

Are key terms defined (population, variables, outcomes, comparison conditions)?

Are the claims appropriately bounded (scope, context, limitations)?

Could a reader identify what evidence would confirm or challenge each claim?

Two Practical Ways to Apply Main Claims from Theo’s Digest This Week

1) When You Are Reading or Evaluating a Paper

Use Main claims to separate what the paper asserts from what it shows. For each claim, locate where support should appear (results, figures, tables, appendices, or cited sources). If you cannot trace a claim to evidence, you have found a verification gap worth noting.

Digest tab with Main claims expanded, listing claims about CPS referrals by race, socioeconomic status, and provider bias.

2) When You Are Writing Your Own Draft

Use Main claims as a diagnostic for clarity and verifiability. Generate a claims list from your abstract or key sections, then revise any claim that a reader could not verify without inference. Typical fixes include specifying what was measured (and in whom), clarifying decision rules, and noting where key materials can be accessed.

Use Main claims as a quick checklist to pressure-test whether your assertions are specific and verifiable.

A Fast “Make It Verifiable” Routine

Step 1: Copy the Main claims list into your notes.

Step 2: For each claim, write one line: “A reader could verify this by checking ___.”

Step 3: If you cannot complete that line cleanly, revise the claim (or the supporting detail) until the verification path is explicit.
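If you keep your claims in a plain list, the three steps above can be scripted as a small checklist helper. This is a minimal Python sketch, not a thesify feature: the function name `verification_checklist`, the example claims, and the evidence locations are all hypothetical placeholders you would replace with your own Main claims list.

```python
def verification_checklist(claims, evidence):
    """Build the Step 2 line for each claim and collect claims that need revision.

    claims:   list of claim strings copied from Main claims (Step 1).
    evidence: dict mapping a claim to where a reader could check it (Step 2).
              A missing or blank entry means the path is not yet explicit (Step 3).
    """
    lines, to_revise = [], []
    for claim in claims:
        path = evidence.get(claim, "").strip()
        if path:
            lines.append(f"{claim} -> A reader could verify this by checking {path}.")
        else:
            # No verification path yet: flag the claim for revision (Step 3).
            lines.append(f"{claim} -> A reader could verify this by checking ___.")
            to_revise.append(claim)
    return lines, to_revise


# Hypothetical claims and evidence locations, for illustration only.
claims = [
    "Group A scored higher than Group B on the primary outcome.",
    "The effect held across all study sites.",
]
evidence = {claims[0]: "Table 2 and the model specification in Appendix B"}

lines, to_revise = verification_checklist(claims, evidence)
for line in lines:
    print(line)
print("Revise:", to_revise)
```

Any claim that ends up in `to_revise` is one where the blank could not be filled, which is exactly the signal Step 3 asks you to act on.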

Your Weekly Tips: Write Methods Readers Can Follow

1) Replace “We Did X” With a One-Sentence Procedure Statement

When you write “we analyzed,” “we assessed,” or “we used,” add the minimum specifics that make the step replicable:

Input (data/materials, sample, corpus)

Method action (what you did, in concrete terms)

Output (what the step produces, such as a metric, a category, or a model estimate)

Example pattern: “We [action] [input] using [approach], producing [output] used in [next step].”

When methods specify inputs, measures, and analysis steps, readers can trace how decisions connect to outcomes.
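One way to apply the procedure pattern consistently is to treat it as a fill-in template that refuses to produce a sentence until every slot is filled. The Python sketch below does that; the function name `procedure_statement` and the example study details are hypothetical and only illustrate the Input, Method action, and Output slots described above.

```python
def procedure_statement(action, input_, approach, output, next_step):
    """Fill the pattern: We [action] [input] using [approach], producing [output] used in [next step]."""
    slots = {
        "action": action,
        "input": input_,
        "approach": approach,
        "output": output,
        "next step": next_step,
    }
    missing = [name for name, value in slots.items() if not value.strip()]
    if missing:
        # A blank slot is exactly the detail a reader would otherwise have to guess.
        raise ValueError(f"Missing procedure detail(s): {', '.join(missing)}")
    return (f"We {action} {input_} using {approach}, "
            f"producing {output} used in {next_step}.")


# Hypothetical study details, for illustration only.
sentence = procedure_statement(
    action="coded",
    input_="40 semi-structured interview transcripts",
    approach="a two-level thematic codebook",
    output="theme frequencies per participant",
    next_step="the cross-case comparison",
)
print(sentence)
```

The error message names the missing slot, so a vague draft sentence such as “we analyzed the responses” immediately tells you which detail to add.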

2) Make Your Decisions Explicit at the Exact Point They Occur

Readers cannot verify invisible choices. Add a short clause wherever a decision changes what counts as evidence:

inclusion/exclusion and screening logic

thresholds/cutoffs and coding rules

missing data/outliers and any transformations

Keep it tight: state the rule, not a long justification.
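If your screening happens in an analysis script, you can keep the written rule and the executed rule in sync by giving each rule a name and counting how many records it removes. This is a minimal Python sketch, assuming records are plain dictionaries; the rule names, thresholds (age 18, 80% item completion), and sample records are hypothetical.

```python
# Hypothetical exclusion rules: names and thresholds are illustrative only.
RULES = [
    ("age under 18", lambda r: r["age"] < 18),
    ("completed less than 80% of items", lambda r: r["completion"] < 0.80),
    ("missing outcome measure", lambda r: r["outcome"] is None),
]


def apply_rules(records, rules=RULES):
    """Apply exclusion rules in order; return retained records and per-rule exclusion counts."""
    retained = list(records)
    counts = {}
    for name, excludes in rules:
        kept = [r for r in retained if not excludes(r)]
        counts[name] = len(retained) - len(kept)
        retained = kept
    return retained, counts


# Hypothetical sample records.
records = [
    {"age": 25, "completion": 0.90, "outcome": 3.2},
    {"age": 17, "completion": 1.00, "outcome": 2.0},
    {"age": 30, "completion": 0.50, "outcome": 1.0},
    {"age": 40, "completion": 0.95, "outcome": None},
]

retained, counts = apply_rules(records)
for name, n in counts.items():
    print(f"Excluded ({name}): n = {n}")
print(f"Retained: n = {len(retained)}")
```

Because the rules run in a fixed order, each record is counted once, under the first rule it fails, so the counts can be reported directly as one short clause per rule in the Methods.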

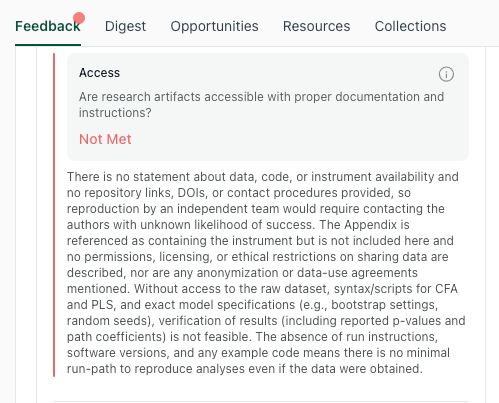

3) Add an Access Line for Anything a Reader Would Need to Reuse the Work

Verification is often blocked by “where is that?” Add one sentence that points to:

instruments, protocols, codebooks, templates, or analysis scripts

supplementary files or repositories

access constraints (and what you provide instead)

thesify’s Access check flags when readers cannot identify where materials live or how analyses could be reproduced.

This is a quick way to make your workflow checkable without expanding the Methods unnecessarily.

4) Run a “Could Someone Else Reproduce This Step?” Pass

Do one targeted read-through focused only on verifiability. For each key step, ask: Could a reader do this again without guessing?

If the answer is no, add only the detail that is missing: a definition, a decision rule, a parameter, or an access pointer. This keeps the prose lean while closing the most common “missing detail” gaps.

This Week at thesify: thesify Survey 2026

Take the thesify survey 2026 (5 to 7 minutes) to help us improve how thesify supports clear, transparent research writing. Your input directly informs what we prioritize next.

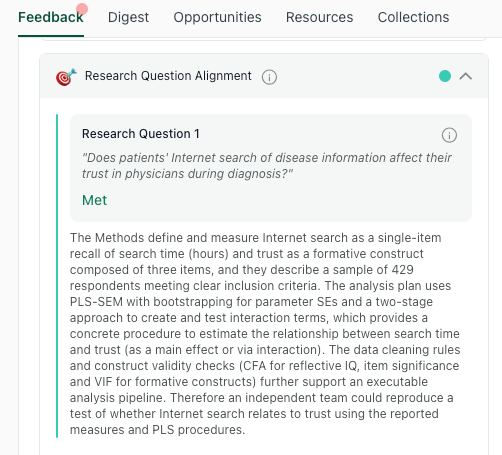

This Week’s Blog: Methods Section Feedback: Inside thesify’s Methods Rubric

If you want Methods feedback you can act on quickly, this post breaks down how thesify’s rubric-based Methods report identifies the exact places your draft becomes hard to follow, evaluate, or reproduce. You will see what each rubric category covers (Experimental Design, Detail and Replicability, Research Question Alignment), what “missing detail” typically looks like, and how to act on targeted, revision-oriented suggestions.

Related Resources

thesify Weekly Newsletter Archive: Think of the archive as a set of targeted interventions for the exact moment you are stuck. Each edition isolates one concrete workflow move, like sharpening a claim, strengthening traceability, tightening a section, or addressing a predictable objection, and then gives you prompts and quick checks to execute the fix without rewriting your whole draft. Open a single issue for a quick course-correction, or follow a short sequence of related editions to systematically strengthen a draft that is close, but still not holding up.

Methods, Results, and Discussion Feedback for Papers: This post shows what reviewers typically flag in these three sections and how section-level feedback can help you revise faster and more precisely. It draws on a real example of the kinds of Methods, Results, and Discussion comments that thesify can now generate in its latest feedback reports. It also provides a one-pass revision workflow you can use this week, plus guidance on re-running your report after revisions to confirm that flagged issues are resolved. We also cover FAQs on Methods, Results, and Discussion feedback.

How to Evaluate Academic Papers: Decide What to Read, Cite, or Publish: Learn how PaperDigest supports smarter reading and writing, with strategies for using it at every stage of research: deciding what to read, checking that you understood it, choosing what to cite, sharing knowledge, and refining your own manuscripts. For instance, maybe you overlooked a critical experiment or a stated limitation; if the digest’s “Main Claims” or “Conclusion” section brings up something unfamiliar, you know your understanding wasn’t complete.

AI Tools for Academic Research: Criteria & Best 2025 Tools: As AI becomes ubiquitous in research, our responsibility is to use it wisely. Not every AI application marketed to students meets academic standards. By applying criteria for transparency, reproducibility, privacy, hallucination control, attribution, policy alignment, bibliographic quality, and methodological rigor, you can distinguish between tools that merely churn out text and those that truly support scholarship. This guide defines ‘academic’ AI, compares top tools, and shows how thesify upholds research integrity.

Get Section-Level Feedback Before You Submit

Ready to catch design oversights, reporting gaps, and overclaims before your next submission? Upgrade today, upload your manuscript to thesify, and explore the new section‑level feedback feature. It’s a simple step that can save you time and strengthen your paper’s credibility.

Need more insights? Visit our full blog archive or newsletter archive for expert advice on academic writing.

Until next time,

The thesify Team